Electronics Manufacturing Services

Are you tired of poor-quality assembly?

Are you tired of fighting manufacturing defects?

Do returns kill your business and your reputation?

When the electronics in a product are poorly assembled and poorly tested before shipping, the device breaks down during the warranty period, and you face a huge bill for replacing it with another unit that may fail as well. These losses are usually much greater than everything you earn on the device! At least they can be counted… But how do you measure the damage to your company's reputation? How do you get customers to believe in your reliability again? These are the questions our customers bring to us first.

Many companies trust us to take care of the electronics manufacturing part of their products. Why? Because in a field as sophisticated as electronics design, it is better to rely on professionals.

We begin with an expert evaluation of your product to identify weaknesses and, if necessary, agree with you on changes that will make the product better. We analyze the components and suppliers, because only high-quality components and a reliable delivery process will allow you to say that your product is the best on the market. We have extensive experience in the field of contract manufacturing, and if you wish, we will recommend reliable suppliers or take care of sourcing for you.

The manufacturing process itself requires even more careful control. No batch goes into production until we have tested a random sample of the purchased components. We select components from different parts of the purchased lot, assemble a small test lot, and carry out a comprehensive check of the assembled prototypes. Only after our engineers give their approval do we start production of the batch. If a defect is found, it is corrected before the whole batch is assembled.

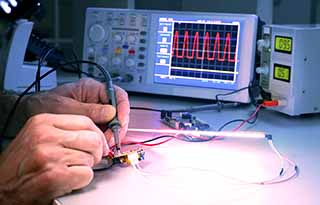

While preparing for production, our engineers build test benches that allow hundreds of devices to be checked at the same time. We run an engineering test on every device in the lot before sending it to the customer. The engineering test covers all operating modes of every single device. All sensitive characteristics are tested: operation across the declared ranges of input voltage and temperature is verified, along with the working characteristics. The working test lasts at least an hour for each device, after which the devices are visually inspected for defects.

Poor packaging and delivery can damage even a batch of devices that is known to work. We package products professionally and cooperate only with reliable delivery services. Our large shipment volumes allow us to obtain the maximum discount on delivery.

And one last thing. After reading this, you may think: “I cannot afford it.”

Here is the thing: YOU CAN AFFORD IT! YOU CAN MAKE IT POSSIBLE FOR YOURSELF!

How? Because our main office is in Europe, where the salaries of highly qualified engineers are lower than in the US. Maintaining the factory and equipment is also cheaper. These benefits allow us to offer high-quality professional electronics manufacturing services at the price of ordinary, careless assembly! At the same time, you save a huge amount of money on refunds. And you gain from a stronger reputation in the eyes of your customers!

Contact us! We look forward to welcoming you to the friendly family of our satisfied customers!
